Facebook will be able to detect suicidal people using artificial intelligence

Facebook has begun using artificial intelligence technologies to help identify and assist members who are suicidal.

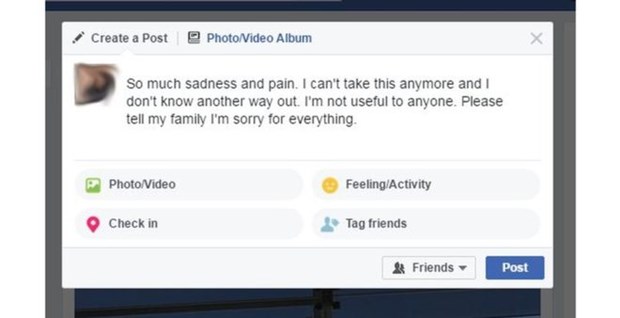

Facebook, the world-famous social network, has developed algorithms that can detect warning signs in users’ messages and in the comments left in response.

Once a case has been confirmed by Facebook’s human review team, the company will try to support those at risk of harm by suggesting ways to get help.

Facebook’s artificial intelligence technologies are currently being tested in the US. Earlier in the month, Facebook founder and CEO Mark Zuckerberg said that artificial intelligence technologies that evaluate messages could also be used to detect terrorists and objectionable content.

As part of this new goal, Facebook plans to help people it considers at risk of suicide by directing them, through the Messenger application, to US mental health support organizations.

Pattern recognition algorithms that evaluate word usage will identify people who are struggling by learning from previous examples. For instance, expressions of sadness, pain, and grief may be treated as a signal.
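Facebook has not published how its algorithms work, so the following is only a toy sketch of the general idea described above: learn word weights from previously labeled examples, then score a new message and pass it to a human reviewer if the score is high. Every name here (`train`, `score`, `needs_human_review`, the example sentences, the threshold) is hypothetical and chosen for illustration; a real system would need far more data and careful clinical input.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def train(examples):
    """Learn a log-odds weight per word from (text, is_concerning) pairs.

    Positive weights mean the word appeared more often in concerning
    examples; add-one smoothing avoids division by zero.
    """
    concern, other = Counter(), Counter()
    for text, is_concerning in examples:
        (concern if is_concerning else other).update(tokenize(text))
    vocab = set(concern) | set(other)
    concern_total = sum(concern.values()) + len(vocab)
    other_total = sum(other.values()) + len(vocab)
    return {
        word: math.log((concern[word] + 1) / concern_total)
        - math.log((other[word] + 1) / other_total)
        for word in vocab
    }


def score(weights, text):
    """Sum the learned weights of the words in a message (unknown words count 0)."""
    return sum(weights.get(word, 0.0) for word in tokenize(text))


def needs_human_review(weights, text, threshold=1.0):
    """Flag a message for a human reviewer; the threshold is an arbitrary assumption."""
    return score(weights, text) > threshold


# Hypothetical labeled examples standing in for "previous examples".
EXAMPLES = [
    ("I feel nothing but sadness and pain", True),
    ("so much grief, I cannot go on like this", True),
    ("great game last night, so much fun", False),
    ("I feel great about the new job", False),
]
WEIGHTS = train(EXAMPLES)
```

In this sketch the model only flags a message; the decision to reach out stays with a person, mirroring the human review step the article describes.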

John Draper, director of the US National Suicide Prevention Program, praises Facebook’s endeavor but says that the ultimate help can only come through referral.

In Draper’s words: “We can be very successful if we can develop support for those who suffer. But we should be able to achieve this without making people feel that we are intruding on their lives. We should respect people’s personal space and evaluate personal dynamics well.”

In recent months, a 14-year-old girl living in Miami died by suicide live on Facebook, and the incident provoked a strong reaction.

Facebook is also working on features for its live broadcasts that let viewers send alerts about a problematic situation.